Export or forward-engineer

In the previous tutorial, we reviewed how to import or reverse-engineer artifacts into your data models. By the end of this tutorial, you will know how to export or forward-engineer schemas and other artifacts from your data models.

You may also view this tutorial on YouTube. Summary slides can be found here.

Hackolade Studio is a data modeling tool with a purpose. We recognize that it is easier for humans to understand data structures through graphical Entity-Relationship Diagrams, which make data structures easy to design, maintain, and discuss with application stakeholders. But data models are not an end in themselves. They are useful because they let us produce schemas, which we define as "contracts" between producers and consumers of data, so that both sides understand each other unambiguously when exchanging data. Data models are also useful to feed business-facing data dictionaries, so that data citizens can understand the meaning and context of data when using it in self-service analytics, machine learning, artificial intelligence, etc. In other words, a data model is an important means to an end.

Because a data model is not an end in itself, we use it as the basis for exports through forward-engineering.

Hackolade supports the forward-engineering of different types of artifacts:

- a JSON or YAML sample document;

- a JSON Schema or YAML Schema, to be saved on the file system (local or network) or to be applied to schema registries;

- an Excel file, used to perform bulk updates on a data model and then import them back into it;

- a model-driven API file in Swagger or OpenAPI specification, generated from the underlying data model;

- a publication of the data model to data dictionaries, currently Collibra.
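The following minimal Python sketch makes the first two items concrete. It shows how one entity in a model could yield both a JSON Schema and a sample JSON document. The `MODEL` dictionary, its `type`/`required`/`sample` keys, and the helper functions are hypothetical illustrations. They are not Hackolade Studio's internal format or its actual output. A YAML export would carry the same structure in a different serialization.

```python
import json

# Hypothetical, simplified model: entity name -> attribute name -> definition.
# This is NOT Hackolade Studio's internal model format; it is for illustration only.
MODEL = {
    "customer": {
        "customer_id": {"type": "integer", "required": True, "sample": 1001},
        "email": {"type": "string", "required": True, "sample": "ada@example.com"},
        "nickname": {"type": "string", "required": False, "sample": "ada"},
    }
}


def to_json_schema(entity_name, attributes):
    """Derive a draft 2020-12 JSON Schema from one entity definition."""
    return {
        "$schema": "https://json-schema.org/draft/2020-12/schema",
        "title": entity_name,
        "type": "object",
        "properties": {name: {"type": attr["type"]} for name, attr in attributes.items()},
        "required": [name for name, attr in attributes.items() if attr["required"]],
        "additionalProperties": False,
    }


def to_sample_document(attributes):
    """Build a sample document from the 'sample' value of each attribute."""
    return {name: attr["sample"] for name, attr in attributes.items()}


if __name__ == "__main__":
    print(json.dumps(to_json_schema("customer", MODEL["customer"]), indent=2))
    print(json.dumps(to_sample_document(MODEL["customer"]), indent=2))
```

Both outputs are derived from a single source, the entity definition. As a result, the sample document and the schema cannot drift apart.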

Additionally, and most importantly, we forward-engineer the appropriate schema creation script for each supported target: DDL for RDBMS, a dbCreateCollection script for MongoDB, CQL for Cassandra/ScyllaDB, Cypher for Neo4j, etc., including constraints, indexes, and other necessary commands.
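The idea behind these target-specific scripts can be sketched with a toy entity rendered for two targets. The first is a generic SQL `CREATE TABLE` statement with `NOT NULL` and `PRIMARY KEY` constraints. The second is a MongoDB shell `db.createCollection()` call with a `$jsonSchema` validator. The entity structure, the type mappings, and the function names are assumptions for illustration only. Real generated scripts are dialect-specific and carry far more detail, such as indexes and target-specific options.

```python
import json

# Hypothetical, simplified entity (not Hackolade Studio's model format).
ENTITY = {
    "name": "customer",
    "attributes": [
        {"name": "customer_id", "type": "integer", "required": True, "primary_key": True},
        {"name": "email", "type": "string", "required": True, "primary_key": False},
        {"name": "nickname", "type": "string", "required": False, "primary_key": False},
    ],
}

# Assumed mapping from logical types to generic SQL types; real DDL varies by dialect.
SQL_TYPES = {"integer": "INTEGER", "string": "VARCHAR(255)"}
# Assumed mapping from logical types to MongoDB $jsonSchema bsonType values.
BSON_TYPES = {"integer": "int", "string": "string"}


def to_ddl(entity):
    """Generate a CREATE TABLE statement with NOT NULL and PRIMARY KEY constraints."""
    lines = []
    for attr in entity["attributes"]:
        column = f'    {attr["name"]} {SQL_TYPES[attr["type"]]}'
        if attr["required"]:
            column += " NOT NULL"
        lines.append(column)
    pk = [a["name"] for a in entity["attributes"] if a["primary_key"]]
    if pk:
        lines.append(f'    PRIMARY KEY ({", ".join(pk)})')
    return f'CREATE TABLE {entity["name"]} (\n' + ",\n".join(lines) + "\n);"


def to_mongo_create_collection(entity):
    """Generate a mongosh db.createCollection() call with a $jsonSchema validator."""
    validator = {
        "$jsonSchema": {
            "bsonType": "object",
            "required": [a["name"] for a in entity["attributes"] if a["required"]],
            "properties": {a["name"]: {"bsonType": BSON_TYPES[a["type"]]} for a in entity["attributes"]},
        }
    }
    return f'db.createCollection("{entity["name"]}", {json.dumps({"validator": validator})});'


if __name__ == "__main__":
    print(to_ddl(ENTITY))
    print(to_mongo_create_collection(ENTITY))
```

Both functions read the same entity, which is the essence of forward-engineering: one model produces several target-specific scripts.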

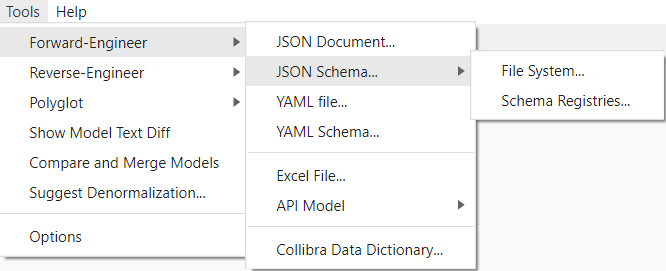

For the JSON target, the menu looks as shown below. The menu is dynamically adapted in other targets to reflect the available capabilities of each plugin:

Exporting file-based artifacts

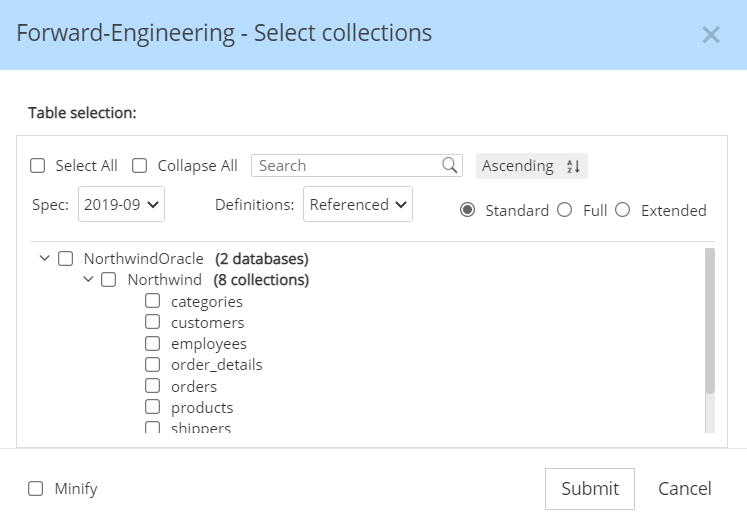

For file-based exports, you are first prompted to select the entities you wish to forward-engineer, along with various options that differ depending on the type of export:

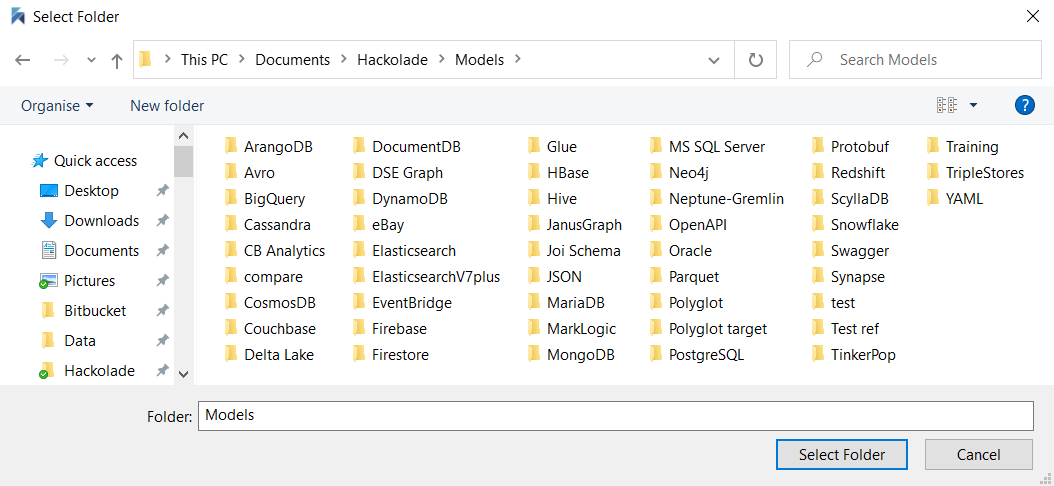

Then you are prompted with the OS-specific dialog to save in the folder of your choice, for example in Windows:

Exporting to instances and systems

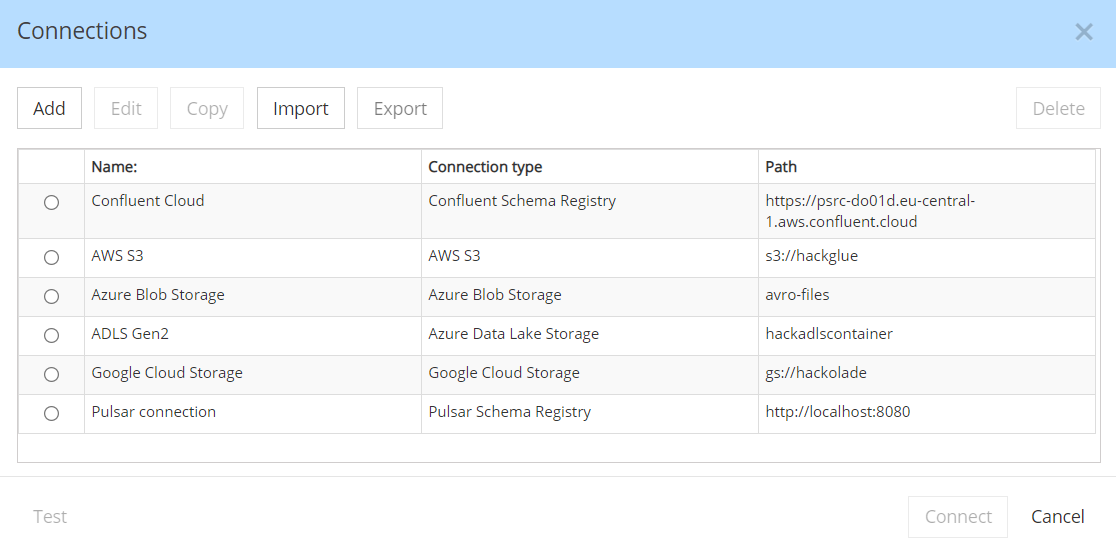

When you apply scripts to database instances, apply schemas to registries, or publish data models to data dictionaries, whether on-premises or in the cloud, you must provide connection parameters and security credentials. You do this in a Connections dialog.

This confidential information stays on your local system, and passwords are encrypted. From this screen, you can connect using previously saved connections, as well as add, edit, copy, import, export, and delete connections.

The connection settings differ for each target, depending on the respective protocols and authentication mechanisms of each target technology.
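The following sketch illustrates how connection parameters and credentials come into play when applying a schema to a registry. It prepares a registration request for the Confluent Schema Registry REST API (`POST /subjects/{subject}/versions`) with HTTP Basic authentication, but does not send it. Confluent Schema Registry is only one example of a registry. This is not a description of how Hackolade Studio itself connects. The URL, subject, and environment variable names in the usage lines are hypothetical.

```python
import base64
import json
import os
import urllib.parse
import urllib.request


def build_register_request(registry_url, subject, schema, username, password):
    """Prepare (but do not send) a Confluent Schema Registry registration request."""
    # The registry expects the schema itself as a JSON-encoded string inside the body.
    body = json.dumps({"schemaType": "JSON", "schema": json.dumps(schema)}).encode("utf-8")
    # HTTP Basic authentication: base64 of "username:password".
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    url = f"{registry_url.rstrip('/')}/subjects/{urllib.parse.quote(subject, safe='')}/versions"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/vnd.schemaregistry.v1+json",
            "Authorization": f"Basic {token}",
        },
    )


if __name__ == "__main__":
    # Hypothetical values; secrets are read from the environment rather than hard-coded.
    request = build_register_request(
        os.environ.get("REGISTRY_URL", "https://registry.example.com"),
        "customer-value",
        {"type": "object", "properties": {"email": {"type": "string"}}},
        os.environ.get("REGISTRY_USER", "user"),
        os.environ.get("REGISTRY_PASSWORD", ""),
    )
    print(request.get_method(), request.full_url)
    # Sending would be: urllib.request.urlopen(request)
```

Keeping credentials out of the code mirrors the principle stated above: connection secrets belong in local, protected storage, not in the artifacts being exported.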

All forward-engineering functions are accessible either from the GUI application or from the Command-Line Interface (CLI), whether run directly or in Docker containers.
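Because the CLI exposes the same functions, forward-engineering can be scripted, for example to regenerate artifacts for several models in a CI pipeline. The Python sketch below only builds and runs command lines. The executable name `hackolade`, the `SUBCOMMAND` placeholder, the `--key=value` argument style, and the `model`/`path` option names are all assumptions. Replace them with the names documented for Hackolade's CLI. By default the sketch performs a dry run that only prints the commands.

```python
import shlex
import subprocess


def build_cli_command(executable, subcommand, options, prefix=()):
    """Return an argv list: [*prefix, executable, subcommand, --key=value, ...]."""
    # The --key=value style is an assumption; confirm it against the CLI's own help.
    args = [f"--{key}={value}" for key, value in options.items()]
    return [*prefix, executable, subcommand, *args]


def run_batch(models, executable, subcommand, make_options, prefix=(), dry_run=True):
    """Build one command per model; print them (dry run) or execute them in order."""
    commands = [build_cli_command(executable, subcommand, make_options(m), prefix) for m in models]
    for argv in commands:
        if dry_run:
            print(shlex.join(argv))
        else:
            # check=True stops the batch at the first command with a non-zero exit code.
            subprocess.run(argv, check=True)
    return commands


if __name__ == "__main__":
    # Hypothetical placeholders: substitute the real subcommand and option names.
    run_batch(
        ["models/customer.json", "models/orders.json"],
        executable="hackolade",
        subcommand="SUBCOMMAND",
        make_options=lambda model: {"model": model, "path": "out/"},
    )
```

To run inside a container, pass a `prefix` such as `("docker", "run", "--rm", "IMAGE")`. Here `IMAGE` is a placeholder, and you must add any volume mounts your setup needs.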

In this tutorial, we reviewed how to export or forward-engineer schemas and other artifacts from your data models. In the next tutorial, we will cover how to generate documentation and diagrams for your data model.